Tracing the AI value chain: the ethical costs of generative AI

When we have secure housing, food and a living wage, we are absolutely responsible for our decision to endorse or refuse technology products that depend on the perpetuation of mass ethics violations.

It’s beyond doubt that there are questionable ethics associated with generative AI. We’ve all heard it: bottles of water per prompt, shady deals with massive publishers, “accidentally deleted” data files, weird chat logs containing other people’s information, and most chillingly of all, confidante chatbots coaching users towards suicide.

But it’s also overwhelming to try to make sense of these stories, and GenAI products do have some handy uses. As a result, many adopters promote the concept of “Responsible AI”.

I’m not on board, of course. To me, the suggestion that someone could “use generative AI responsibly” today is about as silly as suggesting someone could poison a water supply responsibly.

One of the ways people justify “Responsible AI” is by focusing only on the ethics of what happens when they use GenAI. But in order for us to be able to use a GenAI product, someone has to develop and distribute that product. All of these actions, and their ethical implications, connect to and amplify one another.

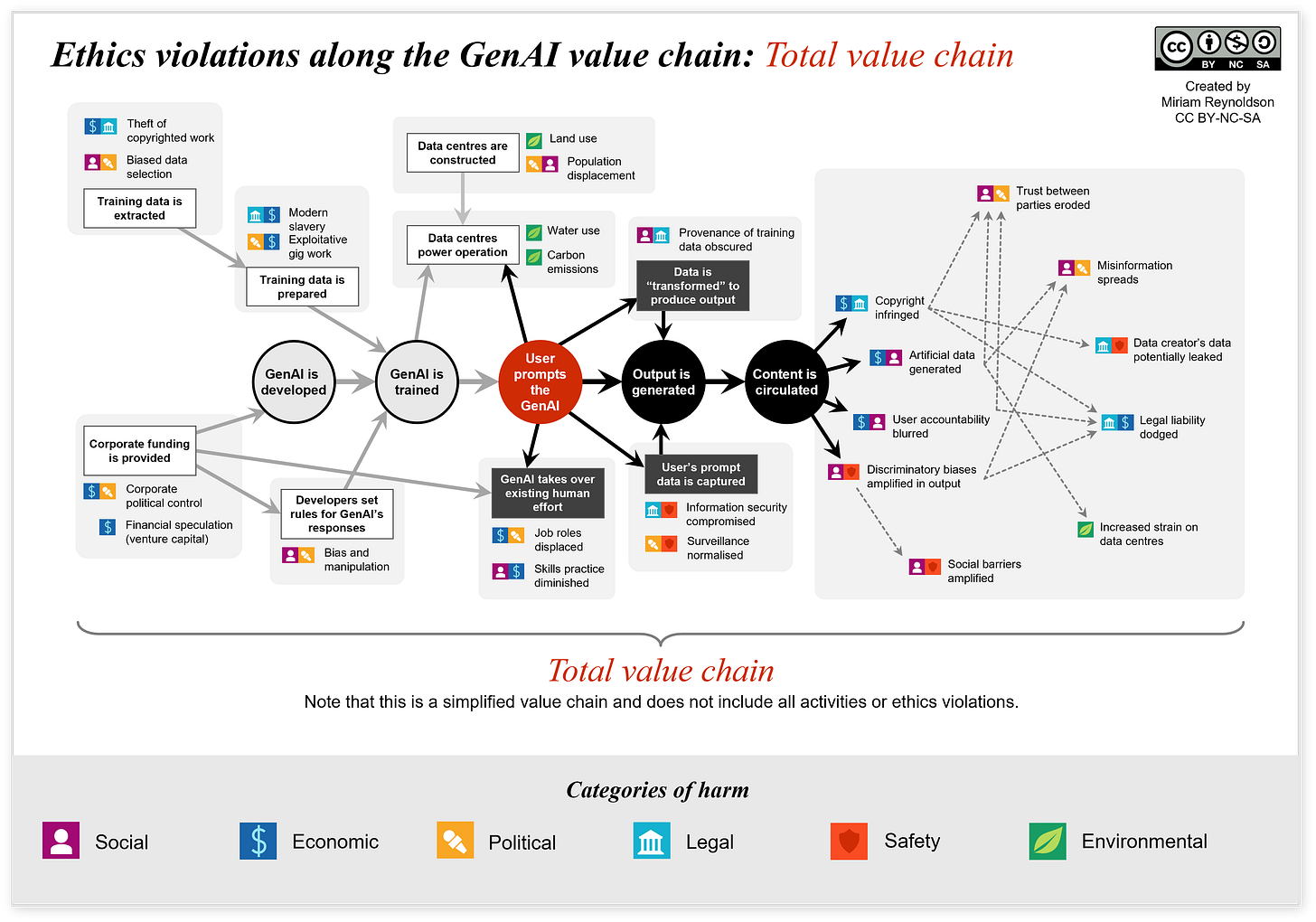

That’s why I wanted to share this chart.[1]

I’ve used the concept of a value chain, which comes from business theory (specifically from the work of Michael Porter). A traditional value chain describes the processes through which a product is created (upstream) and then the processes by which the product flows to consumers (downstream). So:

upstream activities would include things like designing the product, sourcing materials, transporting and assembling parts

downstream activities would include distributing goods, wholesale and retail sale.

Why a value chain?

The idea is that these activities all contribute to the ultimate aim of generating value through a product. When it comes to GenAI, some positive value is certainly being created (though of course it still falls well short of being profitable for either developers or users). I wanted instead to focus on the costs — that is, the ethical costs to people and planet of developing and using GenAI products.

I’ve based the GenAI value chain around the act of a user’s prompt. Upstream activities are those that occur before the user prompts the AI, and downstream activities occur as a result of the prompt. And every one of them produces a spate of ethics violations, often compounding and cascading from each other.[2]

Can we disentangle?

The first time I tried to explain this was about a year ago, in a guest post I wrote for Needed Now in Learning and Teaching. In it, I responded to Tim Fawns’ notion that working with AI is an “entanglement” between us and the technology. I suggested that it was “not impossible to detangle the chain”. Tim gently argued (as is his way) that we couldn’t detangle, but we could, perhaps, trace the tangle.

Of course, Tim is for the most part right. We can’t pick an ethical thread to straighten out — sweatshop data labelling, to choose at random — and claim to be immune to ethical liability for it.

The truth is that opting out is the right thing to do, but that it’s not enough.

These harms stem from choices made on a vast scale by people far more resourced and far more powerful than we are. If we choose to benefit from those choices, we become complicit, but opting out won’t stop the harm.

“Responsible AI” is supposed to be not only about using GenAI ethically, but about developing it ethically.

That’s no small order, because we have to go back — all the way back to the very beginning. We can’t use the money or the datasets that are already in circulation.

If we truly want to practice Responsible AI, we have to start again. And we have to make better decisions about:

who funds development

where data comes from

how creators are compensated

where and why data centres are built

how data centres are powered

the choices made about when and why GenAI should displace human effort

the transparency of transformer algorithms

the labelling of AI-generated content

the legal accountability of GenAI developers and users

the social barriers created and amplified by AI-generated output.

I’ve chosen instead to advocate for defunding and decommissioning GenAI. Just my personal position, but the above is also a genuine option — if we decide it’s worth it.

If you found this tool helpful, illustrative or clarifying, please share it with someone else who can use it. It’s complicated — but it’s as simple as I could make it. It leaves a lot out. But I hope it also illustrates the ways in which harmful choices at one point in the value chain can be amplified by our own choices later down the line.

Accessibility note: This is a PDF document, and I know it’s not perfect. I’ve tried to set it up with clean labels, high contrast and logical reading order, but the mapping of activities and ethical effects means that this is just not a linear document, and it might come across a bit scrambled in a screen reader. I’m sorry!

If this is something people find valuable, I will explore ways of depicting it in other accessible formats.

A small selection of examples of these ethics violations in reported news and legal filings:

Data scraping — Theft of copyright: Jones, 2025; Kerr-Wilson et al., 2025; Knibbs, 2024; Mehrotra & Marchman, 2024

Model training — Modern slavery: Haskins, 2024; Open letter from Kenyan data labellers, 2024; Regilme, 2024

Model training — Exploitative gig work: Castaldo, 2024; Weber, 2024

Model development — Corporate political control: Impiombato et al., 2024; Funk et al., 2023

Model development — Financial speculation (venture capital): Adams, 2025; Pymnts, 2025

Model development — Bias and manipulation: Hijab, 2025; Steedman, 2025

Data centres — land use: Hardwitt, 2025; Lapowski, 2025

Data centres — water use: Abdullahi, 2025; Hayes, 2025

Data centres — carbon emissions: Bloomberg, 2024; Calvert, 2024; Jackson & Hodgkinson, 2024; Luccioni et al., 2022

Generative AI adoption — Job roles displaced: Jones, 2025; Kelly, 2025; Mauran, 2025; Muro et al., 2025; Sadler, 2025

Generated content — Information security compromised: Mascellino, 2024; Riley, 2025; Zorz, 2025

Generated content — Copyright infringed: Basseel, 2025; Koebler, 2024; US Copyright Office, 2025

Generated content — Misinformation generated: Dupré, 2023; Lecher, 2024; Quach, 2024
